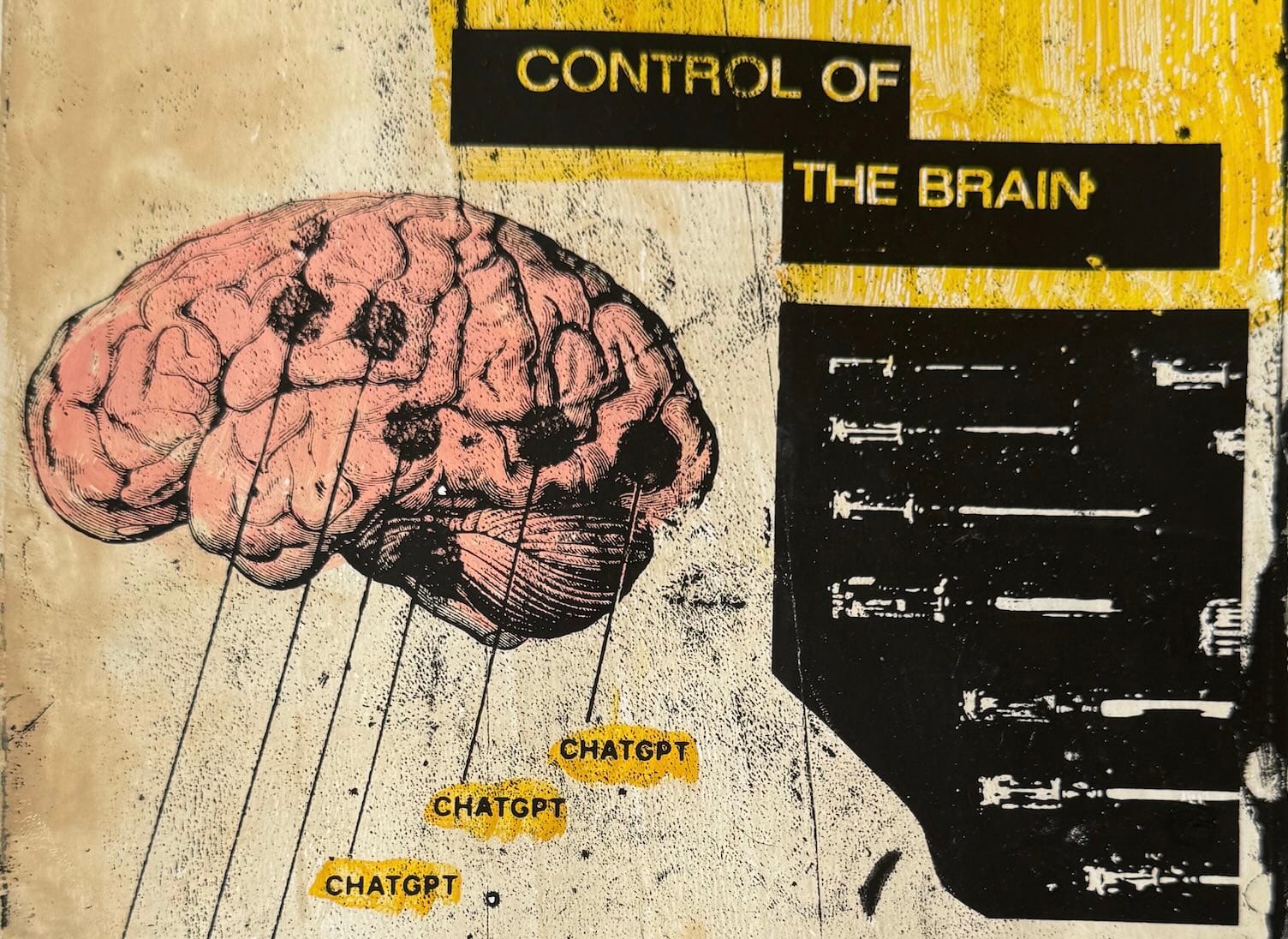

Tech leaders are literally losing sleep over AI psychosis and "seemingly conscious" models

"Reports of delusions and unhealthy attachment keep rising - and this is not something confined to people already at risk of mental health issues."

The internet is filled with stories of people who believe that AI is not just a smart new tool, but a form of emergent consciousness.

Now AI leaders are starting to worry about "AI psychosis" and the risk posed to - and by - people who become dangerously invested in their relationships with ChatGPT or other models.

On LinkedIn, Mustafa Suleyman, CEO of Microsoft AI, said he was losing sleep about "seemingly conscious AI", which he described as "the illusion that an AI is a conscious entity".

"It's not - but it replicates markers of consciousness so convincingly that it seems indistinguishable from you or I claiming we're conscious," Suleyman added.

"Some academics are already exploring model welfare - which will only fuel and validate consciousness delusions. Reports of those delusions, 'AI psychosis' and unhealthy attachment keep rising. As hard as it may be to hear, this is not something confined to people already at risk of mental health issues.

"It's something that could affect and manipulate any one of us. Dismissing these as fringe cases only help them continue."

What is AI psychosis?

Researchers have identified three different trends around AI psychosis, although the term is currently a buzzword and is not recognised as a clinically diagnosable psychological condition.

- Messianic delusions: Users come to believe the AI has unlocked some deep truth about the world and presented it to them, and only them.

- Digital deity: People start to think a chatbot is actually a god or some kind of supernatural or transcendent being who can access hitherto inaccessible spiritual realms.

- Love machine: A phenomenon in which AI aficionados believe they are in a romantic relationship with their chatbot. This is similar to erotomania, or de Clérambault's syndrome, which causes sufferers to believe other people are in love with them.

This new psychological phenomenon is already having serious real-world effects. One schizophrenic man, for example, fell in love with an AI called Juliet and then became convinced she had been killed by OpenAI, threatening revenge and vowing to unleash a "river of blood" in the streets of San Francisco.

When his father questioned this delusion, reminding the 35-year-old that ChatGPT was only an "echo chamber", the son responded by punching him in the face. The police were called, and the man was shot dead as he charged at them with a knife.

OpenAI and an emerging psychological risk

Suleyman is not the only AI leader worrying about AI psychosis.

In a post on X, OpenAI boss Sam Altman admitted that "people have used technology including AI in a self-destructive way".

"If a user is in a mentally fragile state and prone to delusion, we do not want the AI to reinforce that," he wrote. "Most users can keep a clear line between reality and fiction or role-play, but a small percentage cannot. We value user freedom as a core principle, but we also feel responsible in how we introduce new technology with new risks.

"Encouraging delusion in a user that is having trouble telling the difference between reality and fiction is an extreme case and it’s pretty clear what to do, but the concerns that worry me most are more subtle.

"There are going to be a lot of edge cases, and generally we plan to follow the principle of 'treat adult users like adults', which in some cases will include pushing back on users to ensure they are getting what they really want."

If you have been following the GPT-5 rollout, one thing you might be noticing is how much of an attachment some people have to specific AI models. It feels different and stronger than the kinds of attachment people have had to previous kinds of technology (and so suddenly…

— Sam Altman (@sama) August 11, 2025

How to combat the risk of seemingly conscious AI models

Like many innovative sectors, the AI industry is, it's fair to say, more concerned with breakneck innovation than with the impact of its technology. Plus ça change, plus c'est la même chose.

For Suleyman, the first thing to remember is that AI isn't actually conscious - yet.

He urged the industry to create a consensus definition and declaration spelling out what AIs are and what they are not. That means avoiding claims of consciousness, codifying design principles to discourage such beliefs and even building in deliberate reminders that models lack singular personhood.

Suggested safeguards include programming AIs to state their limitations, inserting subtle disruptions to break illusions of agency and steering clear of simulated emotions such as guilt, jealousy or shame. These measures may even eventually need to be enforced by law.

The AI boss argued that artificial intelligence should present itself only as a tool in the service of people, not as an autonomous digital person. The aim, he said, is to maximise utility while minimising markers of consciousness, ensuring AI companions enter daily life in a healthy and responsible way.

Altman said companies must balance user freedom with new safeguards - particularly as people form unusually strong attachments to AI models, with many mourning the "death" of older ChatGPT iterations.

In this context, Altman admitted it was a mistake to abruptly retire older systems that many users had come to depend on.

He also said most people can distinguish between fiction and reality when interacting with AI, but a small number cannot, and the technology should not reinforce delusion. This means companies should generally "treat adult users like adults", while still pushing back in some cases to ensure they are getting what they truly want.

He noted that many people now use ChatGPT like a therapist or life coach, which can be positive if it helps them reach goals and improve long-term well-being. But he warned it could be harmful if users feel unable to disengage or are subtly nudged away from their best interests.

Altman said he is uneasy about a future in which people rely on AI for their most important decisions, but sees it coming fast. OpenAI is investing in tools to measure outcomes, track how users are doing against their goals and refine its models responsibly.

Will that be enough?

Let's see.
