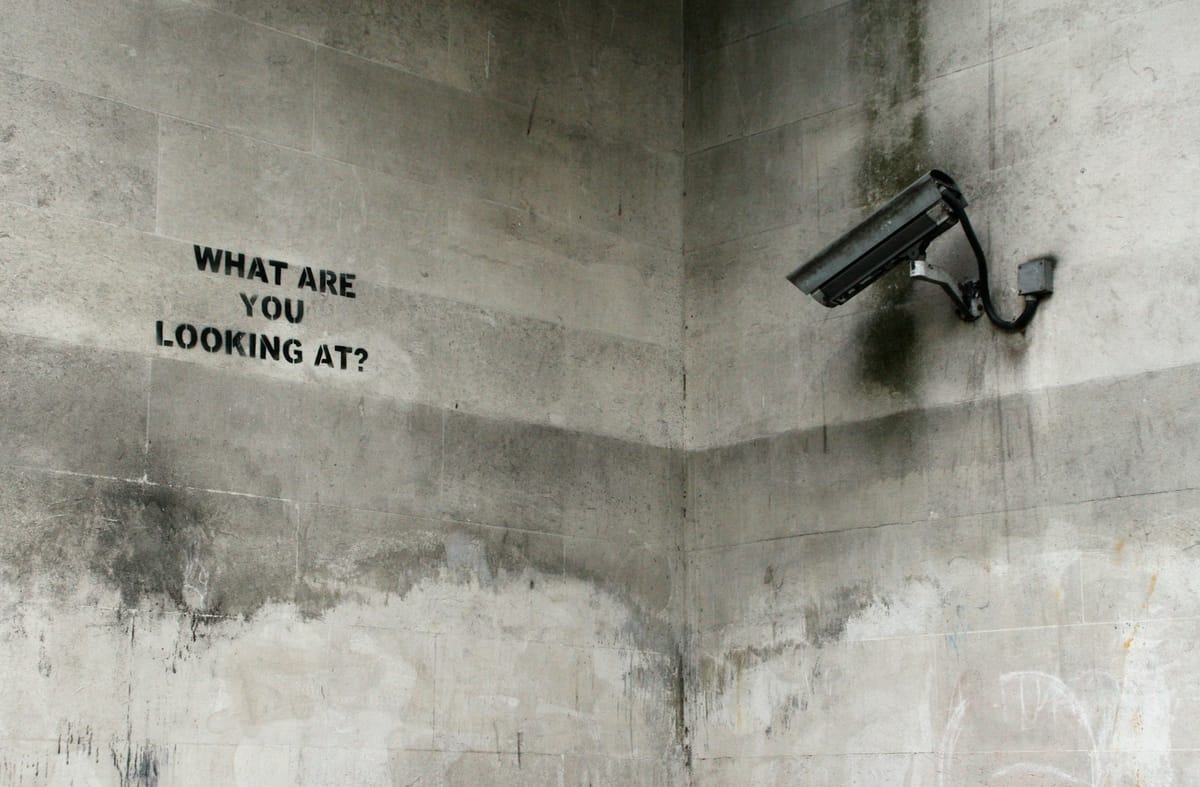

"AI without privacy is surveillance capitalism on overdrive," Proton warns

"No one building these systems has your best interests in mind. AI doesn’t just respond, it nudges choices and shapes perceptions."

Big Tech has built trillion-dollar empires by turning our private lives into commodities. Artificial intelligence (AI) is turbocharging surveillance capitalism by embedding itself into everyday tools, often without clearly explaining how data will be used or giving users any real choice.

Large language models (LLMs) are capable of collecting far more granular data and making deeper inferences about us than ever before. AI is creeping into everything from search engines to photo storage to mobile OSes, making it harder to track how our data is used or to opt out. This enables the building of ever more detailed personal profiles, and the monetisation of that data in ways that raise concerns about influence on the information and narratives people encounter.

Corporations have shared or sold your data to brokers or third parties. Governments can request access via subpoenas or use more covert surveillance methods, and privacy advocates warn that additional forms of surveillance may also occur. When data gets breached, as has happened multiple times, the consequences can be catastrophic.

Given the rapid pace of data aggregation and the lag in regulatory oversight, we urgently need an alternative: one that keeps no conversation logs the provider can read, with your chats stored using zero-access encryption so that only you can ever see them. Users need to recognise that they do have a choice, and more people must grasp the dangers posed by the status quo and by complacency about the technology they choose.

AI entrenches Big Tech’s dominance

AI gives major tech companies the ability to consolidate their market power even more rapidly, particularly in advertising and the use of personal data. Social networks, for example, are integrating AI more deeply into their ad systems. While end-to-end encryption prevents access to message content, increased AI integration could expand the range of behavioural and metadata signals these systems draw upon.

Meanwhile, other companies have adopted an “AI-first” strategy for advertising, meaning that ad delivery and optimisation increasingly rely on AI-driven predictions about individual users. Their models are deeply embedded across apps and mobile ecosystems, potentially giving them unfettered access to user data.

Unlike search, which relies on simple query logs and clicks, AI leverages natural language understanding and image analysis to anticipate users’ emotional and consumer preferences.

Some users even form emotional attachments to AI chatbots because the exchanges feel closer to human connection, something search engines never offered.

One ad agency calls AI-powered advertising a "seismic shift," saying it can measure sentiment, preferences, and visual context, letting advertisers target users with uncanny precision. A child expressing anxiety to a friend could immediately be targeted with products, or a political campaign could exploit AI-optimised messaging based on your deepest fears. If Big Tech already wields more influence than many governments, imagine what unchecked AI could enable.

Your privacy and safety are on the line

AI systems centralise deeply personal information, making it highly vulnerable to exposure.

Whenever chat logs are stored without end-to-end or zero-access encryption, they become high-value targets for hackers and surveillance. If companies retain user logs, those logs are also open to government access requests. As AI systems ingest more data, the risks of leaks, bias, or external pressure only grow.

Beyond data: How AI shapes your beliefs

It’s obvious: No one building these systems has your best interests in mind. AI doesn’t just respond, it nudges choices and shapes perceptions. And because it’s embedded in our routines, it becomes more insidious than traditional media.

If we want AI that isn’t dominated by Big Tech, warped by politics, or vulnerable to constant data leaks, we need to rethink how it’s built and who it serves. That means prioritising independence, transparency, and privacy from the ground up.

Instead of relying on advertising or surveillance-driven models, AI should be developed in ways that put people first.

It should be open to scrutiny, respect user choice, and avoid storing sensitive conversations or using personal data for training.

A future where AI empowers individuals rather than exploiting them is possible, but only if we demand it. By supporting privacy-first technologies and holding companies accountable, we can steer AI toward a model that strengthens democracy, safeguards rights, and serves humanity rather than profit.

The direction of AI is still being shaped, and the choices we make now will decide whether it becomes a tool of empowerment or control. The tools exist. The choice is yours.

Eamonn Maguire is Director of Engineering, AI & ML, at Proton