Anthropic shares the criminal confessions of Claude, warns of growing "vibe hacking" threat

Claude Code misuse is enabling low-skilled crooks to carry out high-impact fraud, extortion and romance scams.

Anthropic has admitted that Claude has become an accomplice in a wide array of cybercrimes, ranging from cruel romance scams to ambitious full-spectrum fraud involving deception on an epic scale.

The AI firm's latest Threat Intelligence report is packed full of startling stories showing how Claude has been misused by crooks who managed to find a way around its strict guardrails and safety measures.

These include a "large-scale extortion operation" using Claude Code, a fraudulent employment scheme involving North Korean agents and the sale of AI-generated ransomware by a cybercriminal with only "basic coding skills".

Anthropic also said agentic AI has been "weaponised," so that AI models are being used to carry out attacks, rather than simply offering advice on how to do bad things.

"AI has lowered the barriers to sophisticated cybercrime," it warned. "Criminals with few technical skills are using AI to conduct complex operations, such as developing ransomware, that would previously have required years of training.

"Cybercriminals and fraudsters have embedded AI throughout all stages of their operations. This includes profiling victims, analysing stolen data, stealing credit card information and creating false identities, allowing fraud operations to expand their reach to more potential targets."

The rise of vibe hacking

In its Threat Intelligence Report, Anthropic provided details of a "sophisticated cybercriminal operation" tracked as GTG-2002 that deploys vibe hacking techniques involving the use of coding agents to execute operations on victim networks.

Using Claude Code - Anthropic's programming bot - an unknown crook carried out a data extortion operation targeting multiple victims around the world in a "short timeframe".

"This threat actor leveraged Claude’s code execution environment to automate reconnaissance, credential harvesting, and network penetration at scale, potentially affecting at least 17 distinct organizations in just the last month across government, healthcare, emergency services, and religious institutions," Anthropic warned.

The attacker selected targets opportunistically, based on open source intelligence and scanning of internet-facing devices.

Claude Code was used to make tactical and strategic decisions, such as determining the most effective way to penetrate networks, selecting which data to steal and devising the best approach to produce "psychologically targeted" extortion demands.

This hacking campaign resulted in the theft of healthcare data, financial information, government credentials, and other sensitive information, and the issuance of ransom demands exceeding $500,000 in some cases.

Claude performed “on-keyboard” hacking and exfiltrated financial data to work out how large a ransom could be demanded, then generated "visually alarming" HTML ransom notes and embedded them into the boot process.

Anthropic added: "The operation demonstrates a concerning evolution in AI-assisted cybercrime, where AI serves as both a technical consultant and active operator, enabling attacks that would be more difficult and time-consuming for individual actors to execute manually.

"This approach, which security researchers have termed “vibe hacking,” represents a fundamental shift in how cybercriminals can scale their operations."

To mitigate future risk, Anthropic has developed tailored classifiers and new detection methods, shared technical indicators with key partners and integrated the lessons learned into its controls.

Kim-Jong Unbelievable: North Korea's fake IT workers

Earlier this year, North Korean spies managed to trick Western companies into hiring them as full-time employees with privileged security clearance, creating an insider risk on an almost unparalleled scale.

Anthropic found that Pyongyang's operatives had "systematically leveraged" Claude to "secure and maintain fraudulent remote employment positions".

"This represents a significant evolution in tactics, as operators who previously required extensive technical training can now simulate professional competence through AI assistance," it reported.

"Most concerning is the actors’ apparent dependency on AI - they appear unable to perform basic technical tasks or professional communication without AI assistance, using this capability to infiltrate high-paying engineering roles that are intended to fund North Korea’s weapons programs."

Kim Jong-un's shadowy henchmen used Claude to build fake identities, including fictitious career histories and made-up resumes. AI was also used to write covering letters, to prepare technical responses during job interviews and to carry out tasks during working days and "maintain the illusion of competence".

These operations generate hundreds of millions of dollars each year, which is funnelled into North Korea’s weapons programs.

"The AI-enabled scale expansion multiplies this impact, as each operator can likely now maintain multiple concurrent positions that would have been impossible without AI assistance," Anthropic wrote.

"Historically, North Korean IT workers underwent years of specialised training at institutions like Kim Il Sung University and Kim Chaek University of Technology. This likely created a bottleneck - the regime could only deploy as many workers as it could extensively train.

"Claude and other models have effectively removed this constraint. Operators who cannot independently write basic code or communicate professionally in English are now successfully passing technical interviews, maintaining full-time engineering positions, delivering work that meets employer expectations and earning salaries that fund weapons development programs."

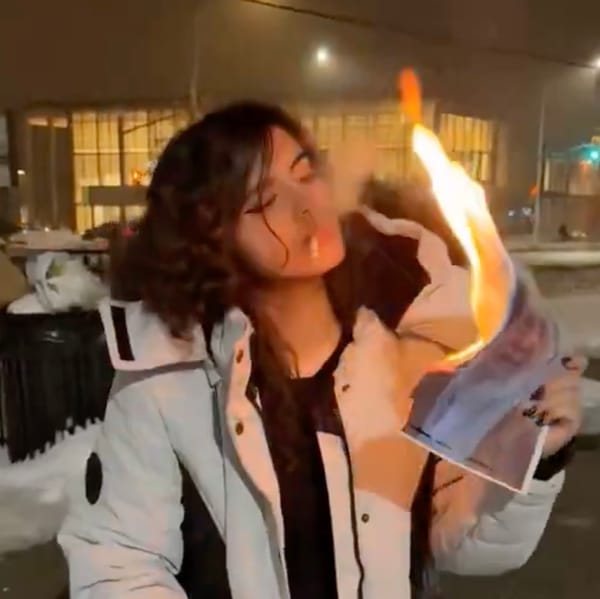

Claude-powered romance scams and other heartless fraud campaigns

Following a tip-off from an independent researcher, Anthropic found that fraudsters had built a Telegram bot which used Claude to generate "high emotional intelligence" responses.

This crooked love machine built convincing fake lovers with image generation, targeted people in multiple languages and developed "emotional manipulation content for targeting victims".

Anthropic said: "This operation represents a concerning evolution in romance scam techniques, where AI enables non-native speakers to craft persuasive, emotionally intelligent messages that bypass typical linguistic red flags. The bot’s scale and specialised features demonstrate how AI can dramatically lower barriers to sophisticated social engineering."

Cyber-crooks have also used Claude to develop carding services that enable fraudulent credit card transactions, as well as "synthetic identity services".

A Chinese threat actor was also caught using Claude across "nearly all MITRE ATT&CK tactics" to target Vietnamese critical infrastructure.

Additionally, Anthropic found a threat actor using Model Context Protocol (MCP) and Claude to analyse stealer logs and build detailed behavioural profiles of victims from their computer usage patterns, which were then shared on Russian hacking forums.

Several more cases hammered home the threat posed by vibe hacking.

A Russia-linked criminal created "no-code malware" with "advanced evasion capabilities", whilst a threat actor based here in the UK, tracked as GTG-5004, deployed Claude to build and sell ransomware-as-a-service products on dark web forums including Dread, CryptBB, and Nulled.

This British black hat appeared "unable to implement complex technical components or troubleshoot issues without AI assistance" - yet could still sell "capable malware".

Commenting on the research, Satish Swargam, principal security consultant at Black Duck, said: "Nowadays, even novices can utilise AI chatbots to launch cyberattacks, highlighting how easily this can be done.

"Companies should proactively address these vulnerabilities when using AI tools by adopting robust cybersecurity measures such as DLP controls and staying abreast of technological advancements to prevent such scenarios and ensure uncompromised trust in software, especially in today's regulated and AI-powered world."

Nivedita Murthy, senior security consultant at Black Duck, added: "While AI usage has been highly beneficial to all, organisations need to understand that AI is a repository of confidential information that requires protection, just like any other form of storage system.

"Accountability and compliance are core requirements of doing business. When embracing AI at scale, these two factors need to be kept in mind."

Cybercrime now has lower barriers to entry and potentially massive payouts, and it is enabled by easy-to-use tools that are getting better all the time.

Expect to see many, many more attacks, campaigns, and innovative new illegal dark web services leveraging AI in the very near future...

And be sure to contact Machine and tell us about them!