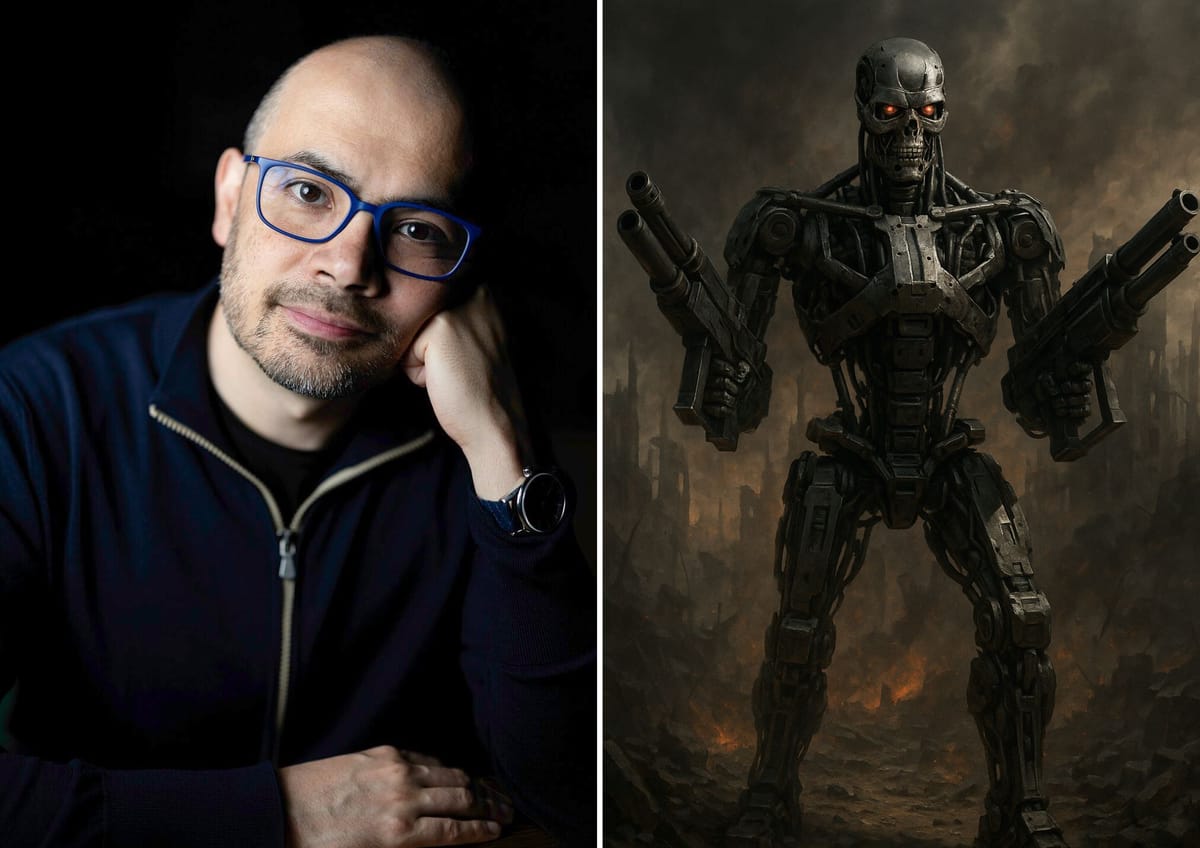

"It's pretty sobering": Google Deepmind boss Demis Hassabis reveals his p(doom)

AI leader speaks out to discuss the risk that humanity will be wiped out by its own creations.

Every modern generation has lived with its own unique existential fear, from the threat of nuclear annihilation in the mid-20th century to more recent worries that climate change will render our fragile planet uninhabitable.

Here at Machine, the existential risk we are most interested in is p(doom) - the likelihood that AI will turn on its creators, go full Terminator and wipe us off the face of the Earth.

Doomer-in-chief Elon Musk recently set his p(doom) at somewhere between 10% and 20% within the next 10 years. Which sounds grim, obviously, but it means our survival rate could be as high as 90%.

Now Google DeepMind CEO Sir Demis Hassabis has delivered a rather more nuanced, cautious take on the question of x-risk.

Hassabis, a British AI researcher and entrepreneur, co-founded Google DeepMind and Isomorphic Labs and now serves as a UK Government AI adviser. In 2024, he and John Jumper won the Nobel Prize in Chemistry for using AI to predict protein structures. He was made a CBE in 2017, knighted in 2024 and named to the Time 100 list twice.

So there should be no better person to reveal the brutal truth about whether Skynet will kill us all or if we're getting a wee bit too excited about technology that's basically a clever autocomplete for remixing the corpus of human knowledge.

The politics of p(doom)

Unfortunately, Hassabis is not about to tell humanity that it's doomed. Nor has he unequivocally stated that we're on the verge of implementing utopian fully automated luxury communism (which is a vanishingly unlikely scenario anyway because elites don't have a great history of sharing their wealth with the rest of us).

In an interview with the podcaster Lex Fridman, Hassabis said: "You know, I don’t have a p(doom) number because I think it would imply a level of precision that is not there.

"I don’t know how people are getting their p(doom) numbers. I think it’s a little bit of a ridiculous notion. What I would say is it’s definitely non-zero and it’s probably non-negligible. So that in itself is pretty sobering."

Hassabis admitted that the future of AI development is "hugely uncertain". We don't know if a fast takeoff is imminent - an intelligence explosion powered by models capable of learning independently through recursive self-improvement.

He also said we have no idea "how controllable" AGI or superintelligence is going to be and whether engineers will encounter "really hard problems that are harder than we guess today."

"On the one hand, we could solve all diseases, energy problems, the scarcity problem and then travel to the stars," he said. "That's maximum human flourishing. On the other hand, is this sort of p(doom) scenario. So, given the uncertainty around it and the importance of it, it’s clear to me that the only rational, sensible approach is to proceed with cautious optimism."

The DeepMind supremo called for "ten times" more effort to understand AI safety risks as we get "closer and closer" to AGI.

He added: "I think it could be amazingly transformative for good. But on the other hand, there are risks that we know are there, but we can’t quite quantify. So the best thing to do is to use the scientific method to do more research to try and more precisely define those risks, and of course, address them."

Should we be more worried about AGI or evil humans?

Hassabis was also asked whether he was more worried about the risk posed by AGI or human misuse of AI technology.

"I think they operate over different timescales and they’re equally important to address," he said. "So there’s just the common garden-variety of bad actors using new technology, in this case, general-purpose technology and repurposing it for harmful ends. That’s a huge risk."

He continued: "How does one restrict bad actors' access to these powerful systems, whether they’re individuals or even rogue states, but enable access at the same time to good actors to maximally build on top of? It’s a pretty tricky problem that I’ve not heard a clear solution to."

Hassabis then hopped off the fence to reveal whether he's more concerned about people or machines, but stopped short of revealing whether our species' demise is around the corner.

He added: "Maybe my mind is limited, but I worry more about the humans - the bad actors."

"We can maybe also use the technology itself to help with early warning on some of the bad actor use cases, right? Whether that’s bio or nuclear or whatever - AI could be potentially helpful there as long as the AI you’re using is itself reliable.

"It’s an interlocking problem and that’s what makes it very tricky. It may require some agreement internationally, at least between China and the US on some basic standards."

Roots of the apocalypse

The term p(doom) emerged from Silicon Valley’s tight-knit AI research community as a grim estimate of the likelihood that AGI could lead to human extinction. It became a kind of insider slang - half-serious, half-terrifying - among engineers, founders, and AI theorists wrestling with the double-edged power of their creations.

While the idea of superintelligent AI posing a risk has been around for decades (most notably in the work of Nick Bostrom and Eliezer Yudkowsky), the actual probability label of p(doom) gained traction as large language models started to hint at a grim potential future.

For critics, p(doom) is alarmist techno-paranoia. But for doomers, it’s a sober analysis of the potentially unprecedented power of AI.

Several researchers have put the likelihood of human annihilation at 90% or higher, including:

Roman Yampolskiy: Latvian computer scientist, p(doom) 99.9%.

Eliezer Yudkowsky: Founder of the Machine Intelligence Research Institute, p(doom) >95%.

Max Tegmark: Swedish-American physicist and co-founder of the Future of Life Institute, p(doom) >90%.

Connor Leahy: German-American AI researcher and co-founder of EleutherAI, p(doom) 90%.

Don't have nightmares!