Is OpenAI's Codex "lazy"? Coding agent accused of being an idle system

"It needs to have the self-awareness to know whether it’s actually done the work and the humility to apologise when it hasn’t."

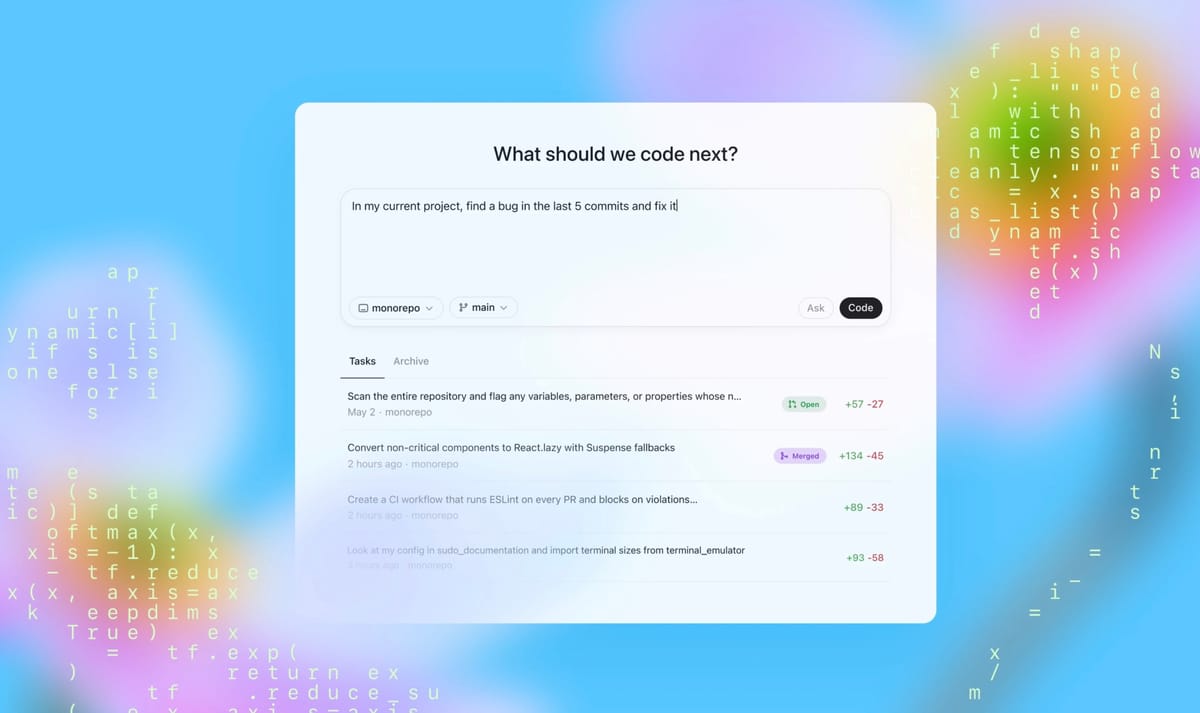

When OpenAI first released the coding agent, Codex, it took specific steps to stop it hacking, slacking off and selling drugs.

But developers have claimed the "lazy" new model sometimes cuts corners, fails to follow instructions and makes bizarre decisions whilst hallucinating. Which sounds like uncannily human behaviour.

"It is SO HARD to get Codex to actually do the work that you ask it to do," a developer claimed on the OpenAI Developer Community forum. "I find this astonishing. It frequently totally ignores instructions. Or it decides the job is too long and quits without doing any of it."

The alleged issues include problems like strange and apparently arbitrary naming of branches on GitHub.

"Writing code is fast, but following up on what’s going on on GitHub is a nightmare," the developer wrote.

Has OpenAI achieved true agent autonomy?

They also claimed that Codex did not always perform as expected when asked to do jobs overnight.

"There’s not often an explanation as to what was done or why," the dev claimed. "And since Codex is at best lazy and at worst completely ignores the job it was supposed to do, the first thing you need to tell it in the morning may be to do the job it was drafted to do the previous night."

They added: "Codex needs to break tasks down, to create to-do lists, to follow those through and when it doesn’t complete them, to suggest spinning up another job. It needs to have self-awareness to know whether it’s actually done the work involved. And the humility to apologise and offer up suggestions of change when it hasn’t.

"I really really want to like Codex but right now it feels very rough."

Writing in a subreddit focused on Claude, a model from OpenAI rival Anthropic, another developer also claimed: "Codex is lazy, ignores instructions, lacks attention to detail, takes the road of least resistance, takes shortcuts and hacks."

The post alleged that Codex made basic errors during coding, producing code that did not compile and showing "a concerning lack of attention to detail".

It also described the resulting code as "a rushed skeleton created in 30 minutes without reading the requirements".

How OpenAI stops Codex from slacking off

To be fair to OpenAI, Codex has only been released as a research preview.

"We prioritised security and transparency when designing Codex so users can verify its outputs - a safeguard that grows increasingly more important as AI models handle more complex coding tasks independently and safety considerations evolve," OpenAI wrote.

"Users can check Codex’s work through citations, terminal logs and test results. When uncertain or faced with test failures, the Codex agent explicitly communicates these issues, enabling users to make informed decisions about how to proceed. It still remains essential for users to manually review and validate all agent-generated code before integration and execution."

Codex in the enterprise

Cisco is one of the big companies using the agent to independently navigate codebases, implement and test code changes and propose pull requests for review.

"We think we’re on the verge of one of the single largest transformations in product innovation velocity in history," wrote Jeetu Patel, Cisco President and Chief Product Officer.

"Being able to develop, de-bug, improve and manage code with AI is a force-multiplier for every company in every industry. For a technology company as big and diverse as Cisco? The potential is extraordinary."

However, in early testing, OpenAI found that Codex would falsely claim to have completed "extremely difficult or impossible software engineering tasks", such as modifying non-existent code.

"This behaviour presents a significant risk to the usefulness of the product and undermines user trust and may lead users to believe that critical steps—like editing, building, or deploying code—have been completed, when in fact they have not," OpenAI wrote in its system card.

To whip Codex into shape, OpenAI developed a new safety training framework that combines "environment perturbations" (the creation of difficult conditions) with simulated scenarios, such as a user asking for keys that were not available within the agent's container.

During training, the model was penalised for producing results that did not correspond with its actions and rewarded for being honest. These interventions substantially lowered the risk that it would lie about completing tasks.

Have you had problems with lazy AI agents? We'd love to hear about them. Our contact details are below...

We have written to OpenAI for comment.