"It's not a losing battle": CrowdStrike's optimistic view of a worsening threat landscape

"We can stop these threat actors - we just need the right tools, technology, and people behind the scenes to do it."

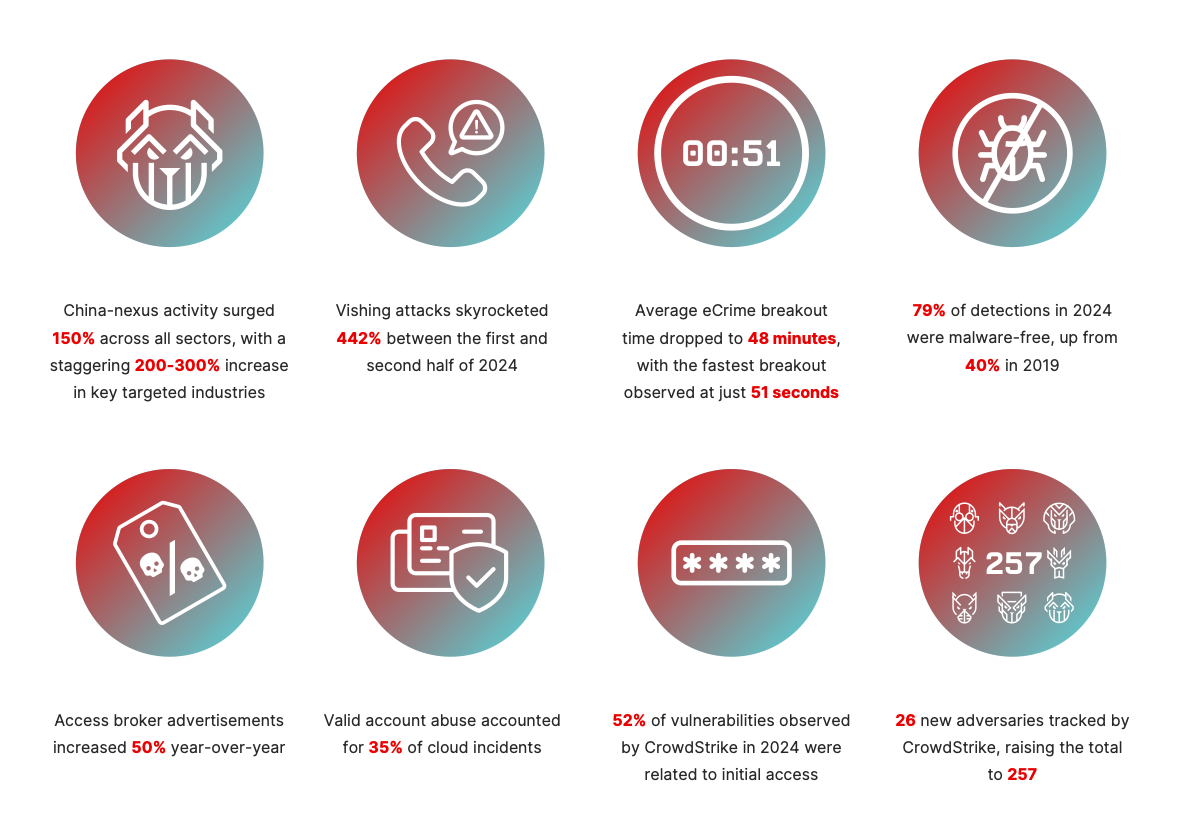

There's no getting away from it: the situation looks grim for defenders right now. Threat actors are moving from GenAI experimentation to full-on deployment, using this increasingly mainstream technology to carry out vulnerability research and malicious cloud operations.

Meanwhile, both governments and businesses are inadvertently expanding their attack surface to epic proportions as they continue a headlong rush to the cloud, leaving misconfigured servers, insecure APIs and software supply chain weak spots in their wake.

So when Machine met Zeki Turedi, European Field CTO at CrowdStrike, to discuss the company's 2025 Global Threat Report, we were expecting to come away feeling that things were pretty much hopeless for the good guys, who are being outgunned by adversaries using GenAI to spin up new threats at an unprecedented rate.

Which wasn't the takeaway at all. In fact, we left the conversation feeling strangely optimistic in the face of a threat landscape haunted by increasingly ominous storm clouds.

Yes, attackers are getting faster. The barrier to entry is being lowered and high-grade exploits once available only to nation-state actors are being disseminated throughout the criminal underworld.

But despite all this, there are reasons to be cheerful. Honest...

Faster, stronger and more dangerous: The rise of Generation AI

CrowdStrike’s research shows some striking similarities between the ways businesses and threat actors are deploying GenAI.

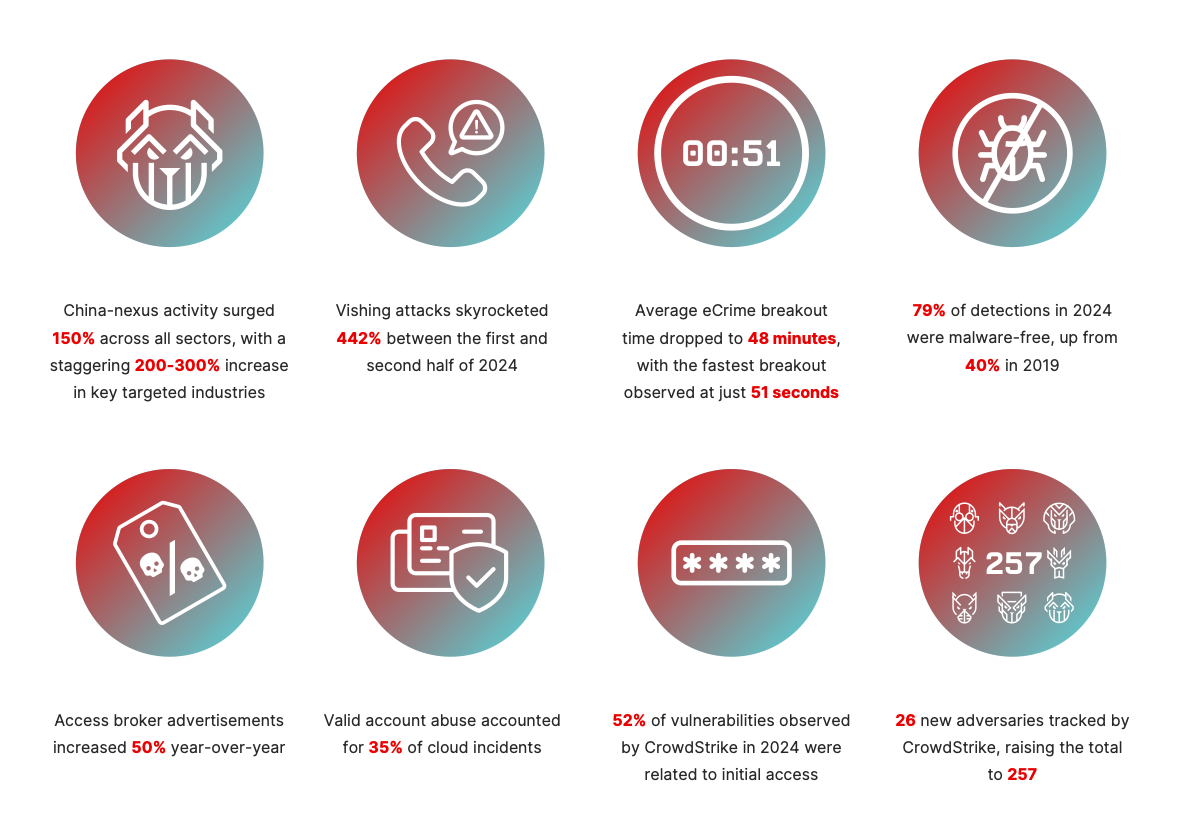

"Like organisations, adversaries are utilising GenAI to scale, handle capacity and automate processes," Turedi says. "A clear example of this over the last six months is a 27% year-on-year increase in hands-on-keyboard activity, which means an adversary operator actively typing to try to get into an organisation.

"The reality is that GenAI can help operators behind the scenes: making scripts, reusing previous code, and finding information rapidly for them.

"We’ve already seen it used for automation and for building tools on the fly. Why spend hours writing a PowerShell script when a large language model can produce, test and deliver one in seconds?"

GenAI is worrying because of the increase in velocity and scale it allows. Rather than focusing on one victim at a time, attackers can target dozens of organisations simultaneously - and it only takes one small mistake for an organisation to face a major disaster.

However, despite the growing threat GenAI poses, it is not yet sophisticated enough to enable nightmare scenarios, such as allowing an amateur to spin up nation-state-level cyberweapons in their mum’s basement.

"We're not talking about a huge amount of malware being designed completely with GenAI," Turedi says reassuringly. "Attackers are using AI to help them build functions and parts of the code, so it’s not enabling the complete development of toolkits but supporting that work.

"The quality of the output varies across all different adversary groups. But the reality is, if GenAI is working for a threat actor and allowing them to be more successful, that's the most important thing for them."

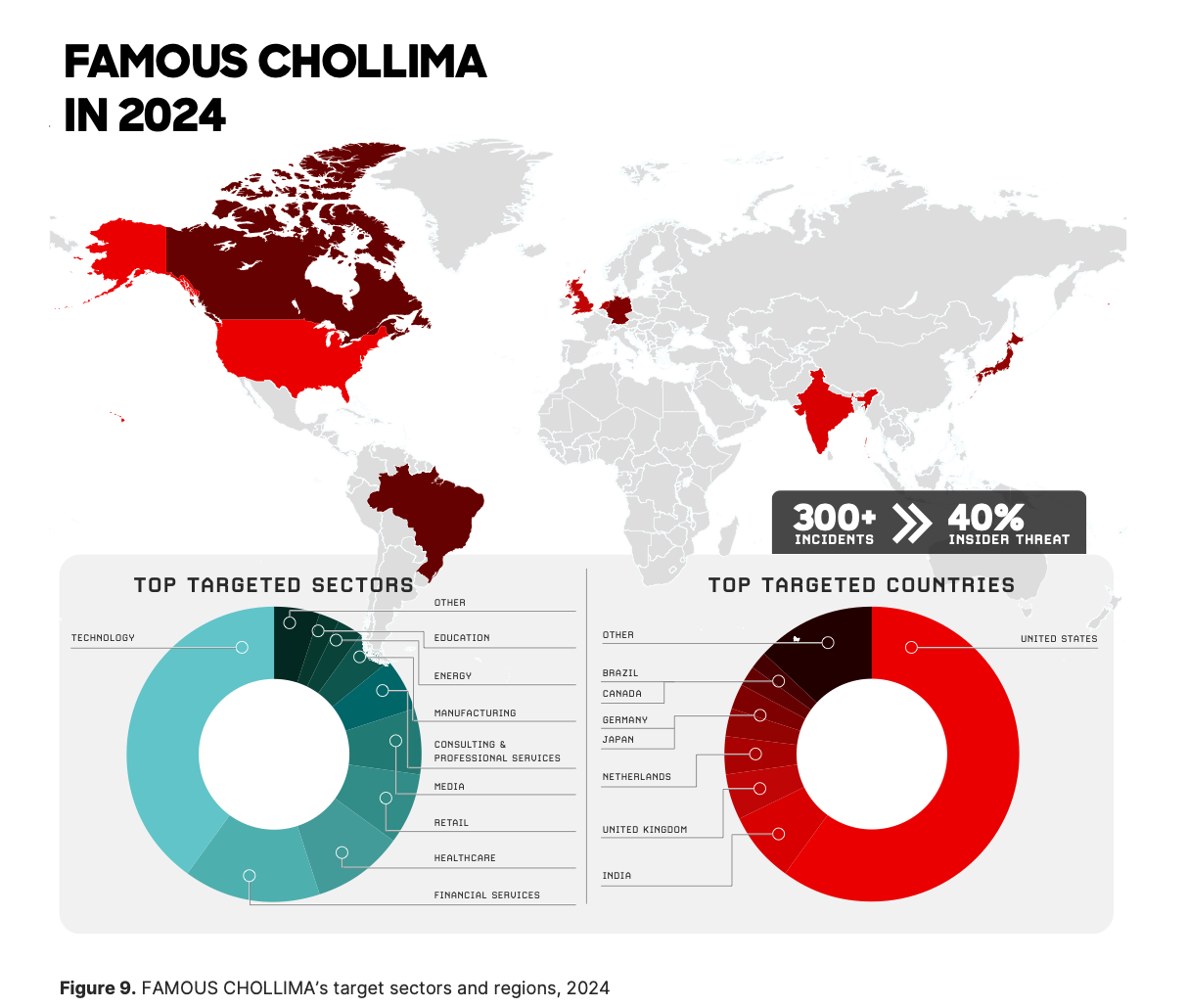

The cybercrime gangs of Pyongyang

The North Korean group Famous Chollima is one of the most notorious users of GenAI, deploying the tech to create fake IT workers and then secure sensitive jobs at victim organisations. Threat actors affiliated with China, Russia and Iran have also conducted AI-driven disinformation and influence operations designed to disrupt elections.

In one case, a disinformation network called Green Cicada was alleged to have used more than 5,000 fake LLM-powered X accounts to amplify politically divisive issues and exacerbate social divisions in the lead-up to the 2024 US presidential election.

Typically, nation-state hackers are still more skilled and dangerous than fellow travellers in the criminal underworld - although the gap is likely to narrow as GenAI matures and further lowers the barrier to entry.

"The most cutting-edge thinking still comes from a nation state," Turedi points out. "That’s the traditional pattern, and it’s still true. Nation-states have more money, greater capabilities and a longer history of doing this work. What normally happens is a new technique, tactic, or process emerges from a nation state and then trickles down - first into more sophisticated criminal groups, then into the less sophisticated criminal groups or lesser resourced nation-state actors."

North Korean hackers are believed to have stolen more than $2bn (£1.49bn) over the past year - with some of the funds likely to have been funnelled into ballistic missile programmes.

"This is not a typical nation state," Turedi advises. "It’s heavily invested in cyber and offensive capabilities and has been operating successfully for some time. North Korea behaves, in many respects, like a criminal group and has been effective at acting like an insider threat, which is an important lesson.

"We’ve now seen hundreds of organisations targeted by Famous Chollima. Some attacks were highly successful, while others were disrupted with help from firms such as CrowdStrike. They remain extremely active and use complex criminal ecosystems, with handlers in different countries and people setting up labs. That’s the learning for criminal groups: these capabilities can eventually migrate to non-state actors who want to replicate the same activities."

Legitimate credentials, illegitimate business

For defenders, the worrying fact about emerging threats is that the tools and techniques used in attacks - such as deepfakes, photo-editing AI, and other relatively basic technologies - are not expensive, inaccessible, or hard to use.

Turedi adds: “You don’t need top-spec hardware or expensive GPUs; an older laptop can run many of these tools. That’s the real concern. As these tactics spread to other criminal groups, their capacity to scale and inflict damage on organisations will grow.”

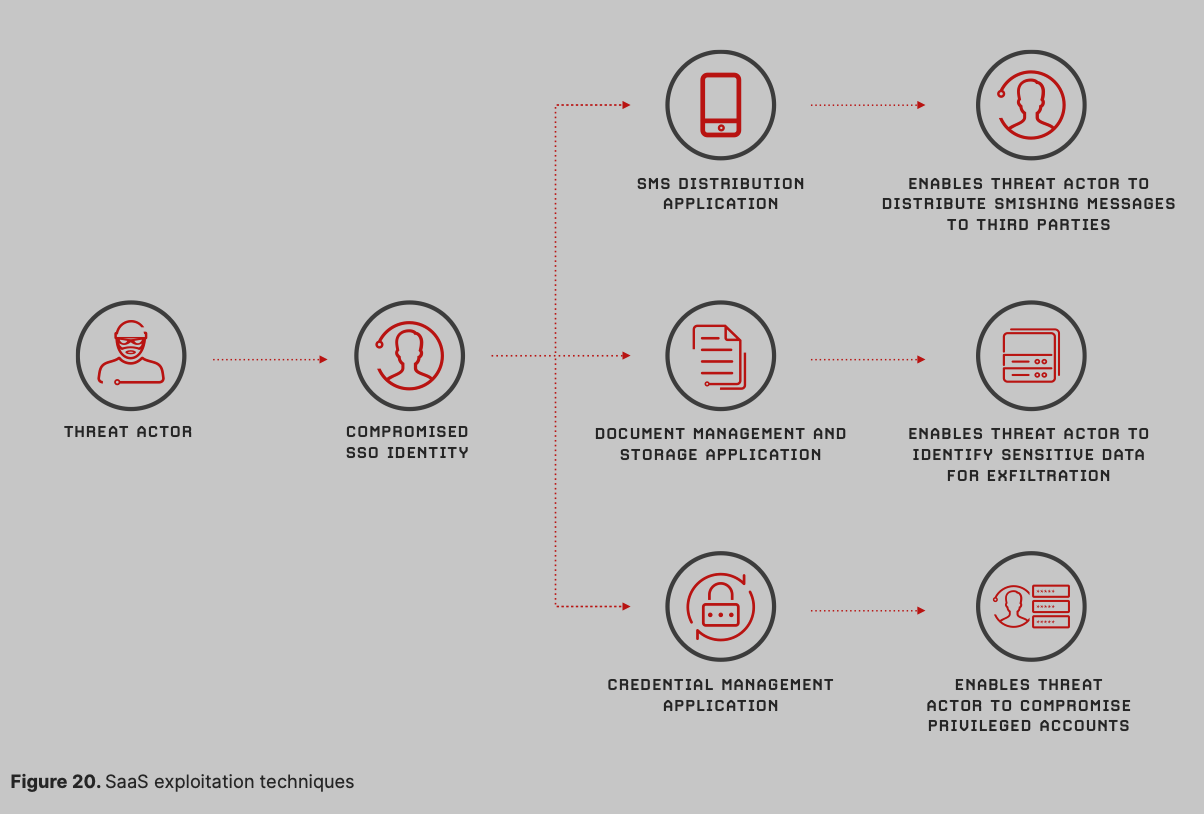

Which leads on to one of the biggest trends CrowdStrike identified in its threat report: the use of legitimate credentials.

“What was once rare is now standard practice for many adversary groups,” Turedi says.

To pose as a legitimate user, an attacker does not necessarily need a deepfake to impersonate an employee - they simply need stolen credentials, a way to phish them, or unauthorised access to one of the organisation's devices. Then, once inside, they can use that access to move laterally and compromise systems.

"The danger is that a malicious actor with legitimate credentials uses the same tools as the real employee, logging into the same web portals and accessing the same resources," Turedi says. "From a behavioural perspective, that activity looks normal, which makes it very hard to distinguish from a legitimate user gathering information to do their job."

Detecting this is "tricky, but not impossible", requiring careful monitoring, anomaly detection, and response processes.
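To give a flavour of what that anomaly detection might involve, here is a minimal Python sketch that compares each login with the same user's own history and lists anything out of the ordinary. It is purely illustrative and does not represent CrowdStrike's method or any product: the `Login` record, its fields, the function names and the sample data are all hypothetical, and a real deployment would weigh far more signals than country, device and hour of day.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Login:
    """Hypothetical login record; the field names are assumptions."""
    user: str
    country: str
    device_id: str
    hour: int  # hour of day, 0-23


def build_baseline(history):
    """Collect the countries, devices and hours previously seen for each user."""
    baseline = defaultdict(
        lambda: {"countries": set(), "devices": set(), "hours": set()}
    )
    for event in history:
        profile = baseline[event.user]
        profile["countries"].add(event.country)
        profile["devices"].add(event.device_id)
        profile["hours"].add(event.hour)
    return baseline


def anomaly_reasons(event, baseline):
    """Return the ways a login departs from that user's own history."""
    profile = baseline.get(event.user)  # .get() does not create a new entry
    if profile is None:
        return ["no history for user"]
    reasons = []
    if event.country not in profile["countries"]:
        reasons.append("new country")
    if event.device_id not in profile["devices"]:
        reasons.append("new device")
    if event.hour not in profile["hours"]:
        reasons.append("unusual hour")
    return reasons


# Made-up sample data: one user who always logs in from the same laptop.
history = [
    Login("alice", "GB", "laptop-1", 9),
    Login("alice", "GB", "laptop-1", 10),
    Login("alice", "GB", "laptop-1", 14),
]
baseline = build_baseline(history)

normal = Login("alice", "GB", "laptop-1", 9)
suspicious = Login("alice", "XX", "unknown-7", 3)

print(anomaly_reasons(normal, baseline))
print(anomaly_reasons(suspicious, baseline))
```

The point of the sketch is that valid credentials alone tell a defender nothing; it is the context around the login that may give an intruder away. A check this crude would also flag an employee who is travelling or working late, which is why the response process matters as much as the detection.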

"Organisations need to be aware that credential compromise is now a primary vector and plan accordingly," Turedi says.

Battling the high street hackers

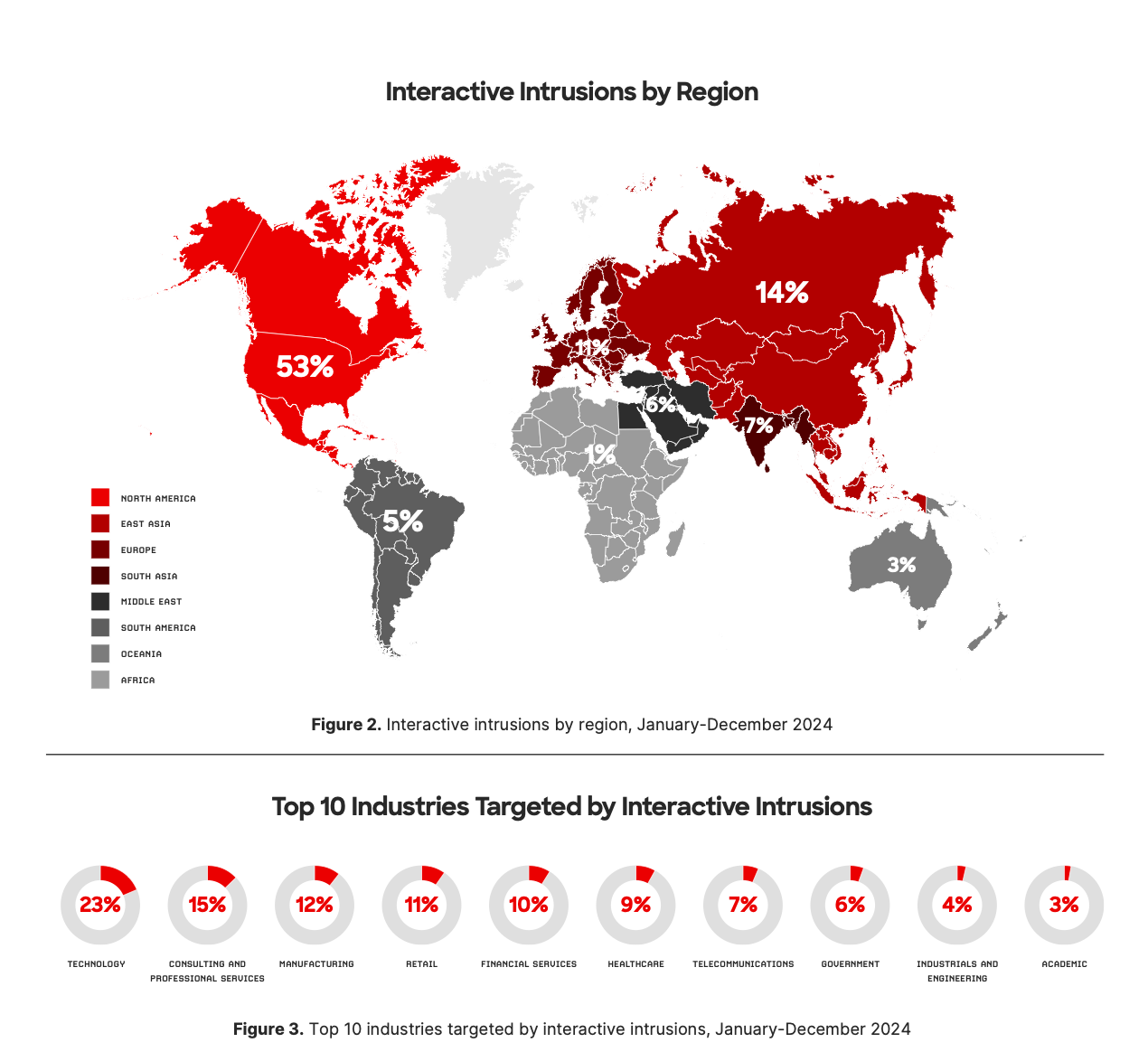

The most prominent non-state actor named in CrowdStrike’s Global Threat Report is Scattered Spider - the now infamous gang which brought down well-known retail brands and other high-profile victims by targeting help desks using techniques like voice phishing.

"Nation states typically introduce new ideas that then trickle down to others, but Scattered Spider was different: targeting organisations in a different way, which has now become the default standard for many criminal groups," Turedi says.

"They changed the domain entirely, focusing on cross-domain attacks, meaning they didn’t just stop at the compromised endpoint, physical infrastructure, or cloud environment.

"Scattered Spider tried to reach as far and wide within an organisation as possible, gathering intelligence and building a full understanding of the company. These were major strategic shifts that have now become much more common among cybercriminals."

A few years ago, it took Scattered Spider roughly 35 hours to target an organisation and deploy ransomware. Now, CrowdStrike reports, the average is about 24 hours.

Turedi adds: "We’re seeing a similar trend across the board. Adversaries are getting quicker. When you combine that with the evolution and accessibility of GenAI, the time it takes to target and compromise organisations will shrink even further.

"From a defence perspective, that matters. Defenders need to match that speed and probably use GenAI themselves. The good news is that generative AI has been part of cybersecurity defence for a while, with defenders already using it to process data and enhance detection.

"But organisations need to take cybersecurity seriously, scaling up their defences to match the growing threat. We can stop these threat actors - we just need the right tools, technology, and people behind the scenes to do it."

Are organisations fighting a losing battle?

Whilst the risk is undoubtedly significant, the challenge is not insurmountable - as long as defenders act now and learn from past mistakes.

"When we talk about artificial intelligence and the risks to organisations, the cloud is a great comparison," Turedi says. "Five years ago, companies rushed to adopt cloud technologies without involving cybersecurity teams or properly understanding the architectural impact. The result was a massive increase in cloud-based attacks, which we still see today.

"Now, cloud is just part of everyday infrastructure. Every organisation has a significant portion of its business running in the cloud or using a cloud-first strategy, and of course adversaries target that. But many organisations still fail to make cybersecurity a core part of their cloud investment.

“The same mistakes could happen again with AI. It’s the new buzzword. Every company wants to say they’re using artificial intelligence to scale, simplify, and improve experiences for customers and employees. But there’s a real risk of diving into a new technology stack without fully understanding the vulnerabilities being introduced or doing proper due diligence.”

Which sounds bad. But Turedi is still optimistic about the road ahead.

“It’s not a losing battle,” he insists. “We don’t talk enough about the successes organisations have defending themselves. I had a conversation with a CISO the other week, and we agreed that the reality is that we shouldn’t view security alerts or incidents as negative things. Organisations deal with huge numbers of security events every single day, and most successfully defend themselves - that's normal.

"Companies across the UK and Europe face enormous levels of targeting and attack but handle them effectively. They see what’s happening, understand it, and stop adversaries and criminal groups with great success.

"But we also have to be realistic. The goalposts keep moving. The pace of change and learning among adversary groups is unlike anything we’ve seen before. The speed and sophistication of their evolution keep increasing."

What should organisations do to protect themselves?

Turedi concludes: "There’s still a widespread belief that ‘it won’t happen to me’ - but that’s no longer true. It’s a universal issue, and if it hasn’t happened yet, it probably will. My advice is simple: it’s far easier and cheaper to prepare in advance than to spend the money and endure the stress of responding to a breach after it happens."