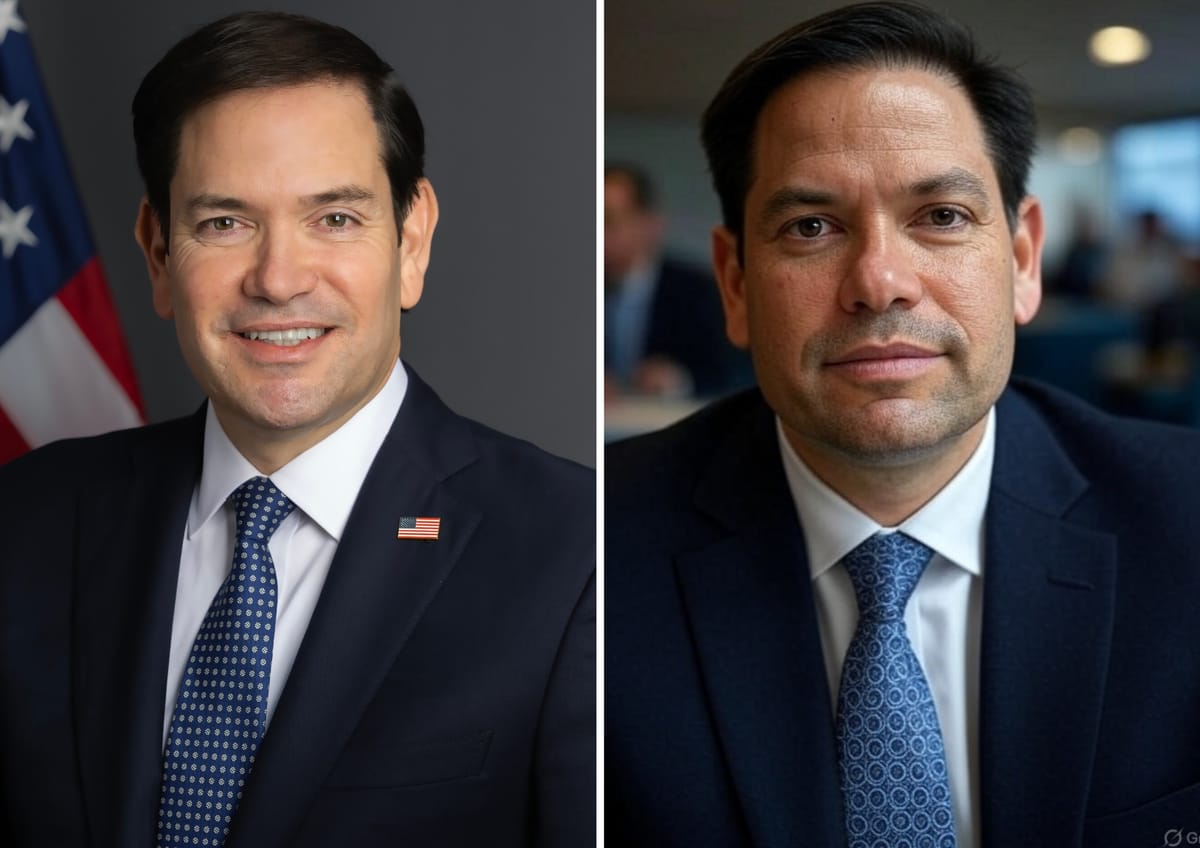

Marco Rubio was AI cloned: How can you avoid the same fate and stay safe from voice spoofing?

An impostor used AI to imitate the voice of the United States Secretary of State, highlighting a growing risk that all organisations need to be aware of.

"AI tools that can clone your voice, photo, or create a video of your likeness are now cheap, accessible, and dangerously convincing, fueling a surge in what I call ‘Post-Real’ events."

That's the warning from Pete Nicoletti, Global Field CISO at Check Point Software, following revelations that an impostor used AI to clone the voice of Secretary of State Marco Rubio and then contacted three foreign ministers, a US governor and a member of Congress.

An "unknown actor" reportedly created a model capable of impersonating Rubio's voice, then got in touch with officials using the encrypted messaging service Signal.

The incident was disclosed in a State Department cable obtained by CBS News, which reportedly stated: "The actor left voicemails on Signal for at least two targeted individuals, and in one instance, sent a text message inviting the individual to communicate on Signal."

According to the cable, the fake Signal account was created in mid-June and used the display name marco.rubio@state.gov.

"The State Department is aware of this incident and is currently investigating the matter," a State Department spokeswoman said. "The department takes seriously its responsibility to safeguard its information and continuously takes steps to improve the department’s cybersecurity posture to prevent future incidents. For security reasons, and due to our ongoing investigation, we are not in a position to offer further details at this time."

The growing problem with deepfakes

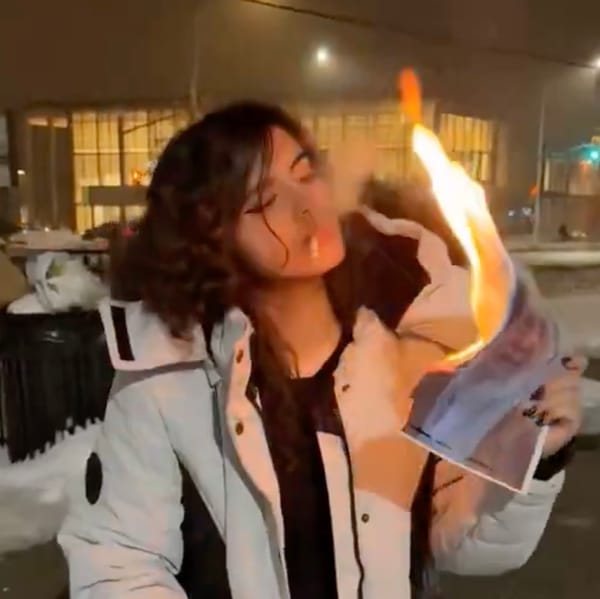

The incident is a grim reminder that AI is making it increasingly difficult for people to trust what they see and hear. In 2024, fraudsters tricked an employee into sending them $25 million using deepfake digital impersonations of senior staff.

"Every participant except the CFO was AI-generated," Nicoletti added. "Politicians are being ‘quoted’ using statements they never made, and even corporations are unknowingly hiring AI-generated job applicants who later access sensitive data.

"This latest deepfake targeting Senator Marco Rubio is just another alarming step in a growing crisis. Another noteworthy detail is that the perpetrator had access to working phone numbers, suggesting a deeper level of preparation and potential data compromise. We can no longer rely on outdated cues, such as six fingers or poor lighting, to spot a fake. AI has evolved!

"Organisations need to implement proactive countermeasures: use pre-agreed code words shared out-of-band before meetings, and during video calls, ask participants to show the back of their head, an area current AI struggles to replicate. Tools like www.facecheck.id can help detect unauthorised use of your likeness, but ultimately, the key defense is educating people to recognise and respond to these threats."

The new face of an old scam

Unfortunately, there is no button that organisations can press to protect their staff from AI scams that are increasingly convincing and ever easier to pull off. Phishing has always exploited human fallibility, the weak underbelly of even the toughest security posture.

Voice and video cloning work the same way, targeting the same and possibly unfixable weakness that inevitably arises whenever people have access to something criminals want to steal.

Truman Kain, senior researcher at Huntress, said: "Creating a voice message using a cloned voice is trivial these days, and having AI generate text that impersonates someone’s tone or writing style is even easier.

"Using an app like Signal allows you to set whatever profile name you’d like, but text messages also come with impersonation-related risks, as phone numbers can be spoofed. It’s on the person receiving the message to verify who they’re talking to.

"It won’t be long before voice cloning technology advances to the point that attackers can easily place real-time phone calls (not just voice messages), impersonating the voice of their choice. It’s already possible; it’s just more difficult and lower quality than creating a voice message.

"Scammers have also used voice cloning in grandparent scams, where they send a voice message claiming to be a grandchild who needs money due to a health emergency or legal trouble. The best way to protect yourself against voice cloning scams is to be aware that deepfaked audio clips are quick and easy to create, and to have a passphrase or way to verify that you’re talking to a family member."

The proliferation of AI deepfake technology

What is most worrying about AI fraud is that the tools that enable it are easy to obtain, require relatively little skill to use and are improving rapidly.

Thomas Richards, infrastructure security practice director at Black Duck, told us: "This impersonation is alarming and highlights just how sophisticated generative AI tools have become. The imposter was able to use publicly available information to create realistic messages.

"While this has so far only been used to impersonate one government official, it underscores the risk of generative AI tools being used to manipulate and commit fraud. The old software world is gone, giving way to a new set of truths defined by AI and global software regulations; as such, the tools to do this are widely available and should start to come under some government regulation to curtail the threat."

Although the Marco Rubio incident does not appear to have had disastrous consequences, it's easy to imagine a similar campaign causing much more damage.

More than a decade ago, in 2013, a fake tweet posted from the hacked Associated Press Twitter account claimed Barack Obama had been injured in a bomb attack on the White House and briefly wiped $140 billion from the US stock market. That tactic is rudimentary compared to the growing persuasive power of AI deepfake tools.

This time around, Rubio and the State Department have been left embarrassed. But the impact of a future incident could be much, much worse.

Micki Boland, Global Technologist at Check Point Software, said: "This incident marks a new chapter in deepfake-enabled social engineering. The impersonation of a high-level official like Secretary of State Marco Rubio echoes similar attacks, such as the deepfake of Ukraine’s Foreign Minister targeting U.S. Senator Ben Cardin in 2024. While we can’t confirm the actor behind this latest event, the intent likely involves manipulating geopolitical narratives, accessing restricted information, and eroding diplomatic trust.

"Today’s generative AI tools can fabricate highly deceptive audio, video, and even writing, but what makes this attack sophisticated is the calculated use of social engineering: identifying vulnerable targets, training AI models with tailored data, and orchestrating believable misinformation campaigns across social media. These are not random attacks. As I personally describe it, they’re malinformation campaigns - strategic, malicious use of manipulated narratives to deceive and destabilise.

"Organisations equipped with a robust External Risk Management (ERM) solution may be able to detect early warning signs of such attacks, especially if threat actors are using fake or coordinated accounts to amplify malicious narratives and spread malinformation. I’ve studied the real-world applications of these technologies through MIT’s No Code AI and Machine Learning: Building Data Science Solutions course, and it’s clear that threat actors are becoming more creative and capable. Organisations must evolve their detection strategies and prepare for a world where truth can be manufactured in seconds."