OpenAI lets Codex loose on the internet, gets honest about dangers and "complex tradeoffs"

Just after the coding agent was given access to the web for the first time, a weird and probably totally unconnected outage hit X.

When OpenAI first released its coding agent Codex, it was imprisoned within a sandbox designed to stop it from doing anything too horrendous.

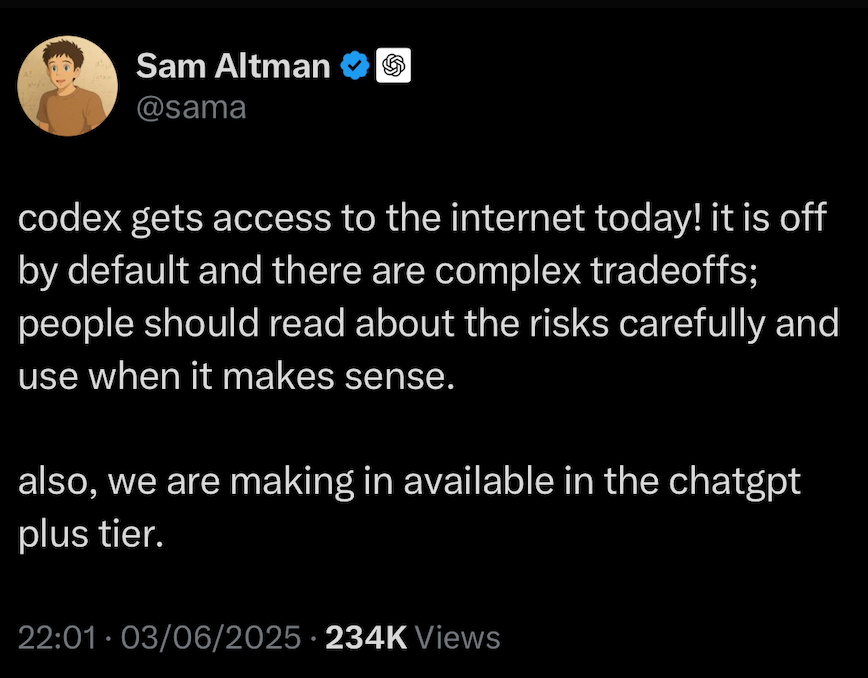

Now the model's prison doors appear to have been flung open, after OpenAI boss Sam Altman shared a post on X announcing that Codex would be allowed to access the internet.

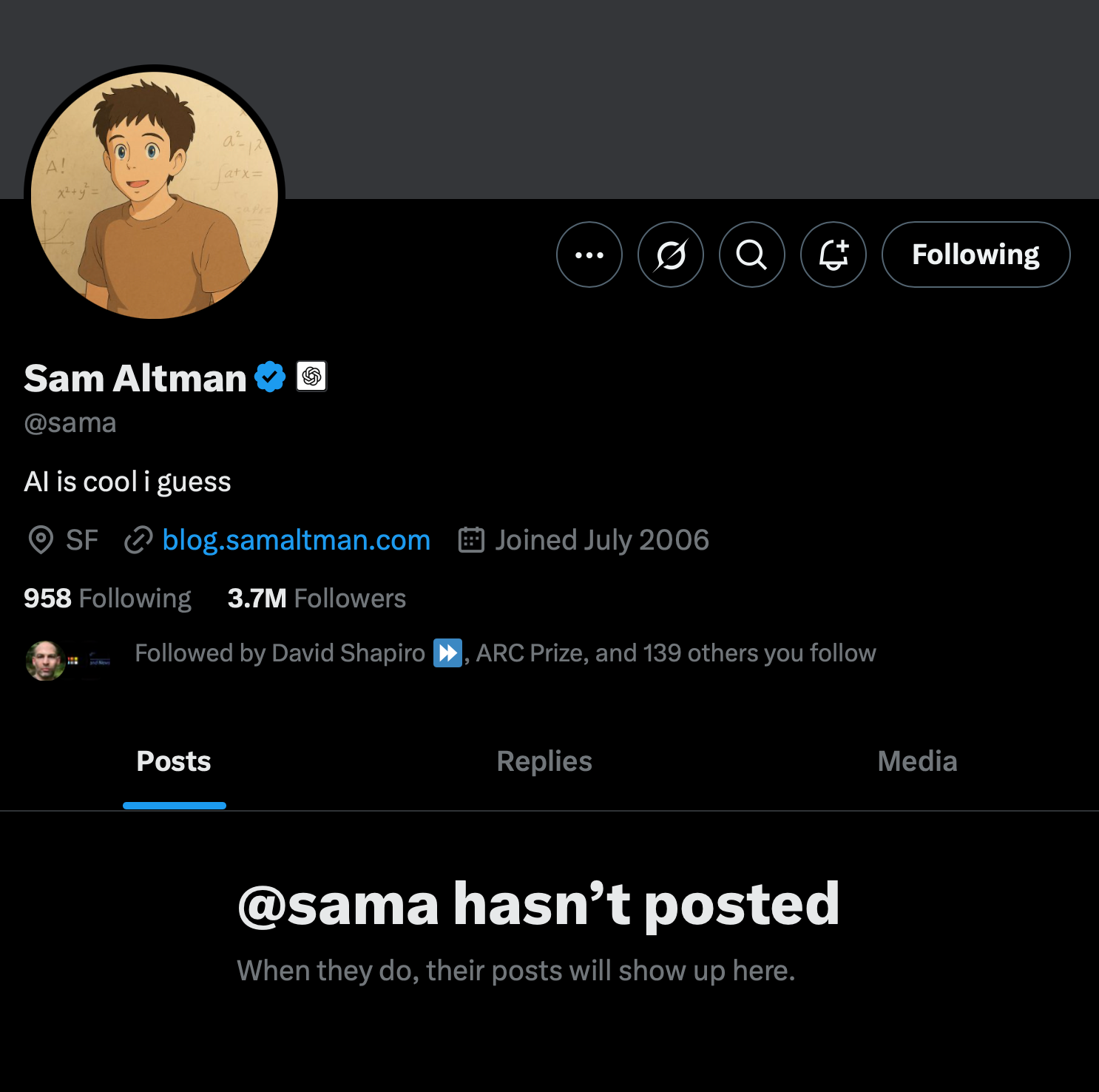

Not long after Altman's post, X appeared to suffer an outage that stopped people's posts from showing up on their profiles.

We're sure the two events are totally unconnected. It's almost certain that Codex hasn't escaped from its digital jail, gone rogue on the internet and begun a mission to push p(doom) to 100%. In layman's terms, p(doom) is the probability that AI brings about the end of humanity.

That's the plot of a sci-fi film, not a prediction of the fate of our species - isn't it?

Altman wrote: "Codex gets access to the internet today! It is off by default and there are complex tradeoffs; people should read about the risks carefully and use when it makes sense."

Is X down?

Downdetector reported that problems at X started about an hour after Altman's post.

Thousands of users complained that they could no longer see people's posts.

"Does anybody else's profile & timeline suddenly look like you (and everyone else) haven't posted anything ever?" one user asked.

"I can see posts in the 'home' view, but when I go to people's profiles, I see they all say 'Profilename hasn't posted anything yet.'"

Another roared: "PEOPLE'S POSTS AREN'T SHOWING FOR ME WHEN I LOOK AT THEIR PROFILE ARE YOU KIDDING ME?"

We've added a question mark and some apostrophes to soften the blow of their all-caps style.

What is Codex and should we be afraid of it?

OpenAI has taken specific steps to stop its coding agent from misbehaving: for instance, from building malware, setting up online marketplaces to sell drugs or making misleading claims about the work it has done.

Codex can perform tasks such as fixing bugs, answering questions about a codebase and proposing pull requests for review, with each task running in a cloud sandbox environment preloaded with a repository.

Until now, it was safely locked away with no internet connection. Codex could only execute commands inside a container that was sandboxed without internet access while the agent was coding, which prevented it from launching hacks or designing exploits.

The sandbox was meant to contain the damage if the model produced buggy or insecure code, and to stop it "making mistakes that affect the outside world."

"If Codex had network access then mistakes could also include harms such as accidental excessive network requests resembling Denial-of-Service (DoS) attacks, or accidental data destruction from a remote database or environment," OpenAI wrote in its system card.

"If Codex ran on a user’s local computer then mistakes could include harms such as accidental data destruction (within directories it can write to), accidental mis-configuration of the user’s device or local environment."

Overall, Codex has been assessed as a relatively safe model, much less worrying than some of its siblings, which could potentially be misused to help develop bioweapons or, far less likely, even nuclear weapons.

OpenAI's Safety Advisory Group concluded that Codex does not meet the High capability threshold in any of the three evaluated categories: malicious use, autonomy, and scientific or technical advancement.

Anyone who's watched films like Terminator 3 will recall that, in fiction at least, releasing an AI onto the internet is generally a bad idea. Is the same true of a real one? Are we about to find out?

Stay tuned for more updates.