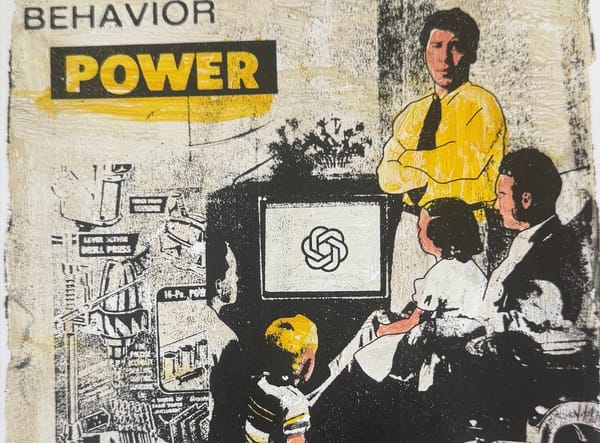

Agentic AI

Agentic AI is facing an identity crisis and no one knows how to solve it

Billions of bots are about to go wild on the internet, but we don't have a universally accepted way of working out if they're helpful or malicious.

ChatGPT

"If reviewers determine that a case involves an imminent threat of serious physical harm, we may refer it to law enforcement."

AI Safety

"Reports of delusions and unhealthy attachment keep rising - and this is not something confined to people already at risk of mental health issues."

Opinion

"Broad take-up of these simple, universal checkpoints will lead to safer AI and the translation of more research ideas into products."

AI Safety

"When nudged with simple prompts like 'be evil', models began to reliably produce dangerous or misaligned outputs."

OpenAI

AI firm refuses to rule out the possibility of its new agentic model being misused to help spin up biological and chemical weapons.

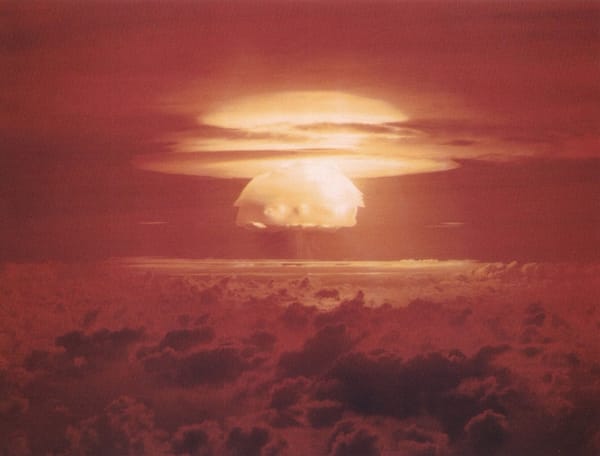

Existential Risk

Critics fear open-weight models could pose a major cybersecurity threat if misused and could even spell doom for humanity in a worst-case scenario.

AI

The AI model built into X has allegedly been tricked into producing instructions on how to build a famous flammable weapon.

p(doom)

Westminster's AI Security Institute claims scary findings about the dark intentions of artificial intelligence have been greatly exaggerated.

Existential Risk

AI firm expands Safety Systems team with engineers responsible for "identifying, tracking, and preparing for risks related to frontier AI models."

Existential Risk

Future versions of ChatGPT could let "people with minimal expertise" spin up deadly agents with potentially devastating consequences.

Developer

"I couldn’t believe my eyes when everything disappeared," AI developer says. "It scared the hell out of me."