Private sector threat actors are trying to clone rivals' AI models, Google warns

Nation-state adversaries are also deploying AI to automate phishing, accelerate attack lifecycles and conduct reconnaissance.

Google has warned that private-sector actors are stepping up efforts to clone commercial AI models via their publicly accessible interfaces.

In an advisory issued ahead of the Munich Security Conference, Google Threat Intelligence Group researchers said they had identified an increase in distillation attacks, also known as model extraction.

Everyday users have little to fear from distillation attacks. But for model developers and service providers, the threat is acute.

Private entities use model extraction attacks to copy "proprietary logic" - the behaviour encoded in a model’s weights and tuning - and "specialised training", which are both high-value targets.

"Historically, adversaries seeking to steal high-tech capabilities used conventional computer-enabled intrusion operations to compromise organisations and steal data containing trade secrets," Google Threat Intelligence Group wrote.

"For many AI technologies where LLMs are offered as services, this approach is no longer required; actors can use legitimate API access to attempt to 'clone' select AI model capabilities."

Google has not observed direct attacks on frontier models or advanced persistent threat (APT) actors building their own generative AI products. However, it "observed and mitigated frequent model extraction attacks from private sector entities all over the world and researchers seeking to clone proprietary logic".

What are model extraction attacks?

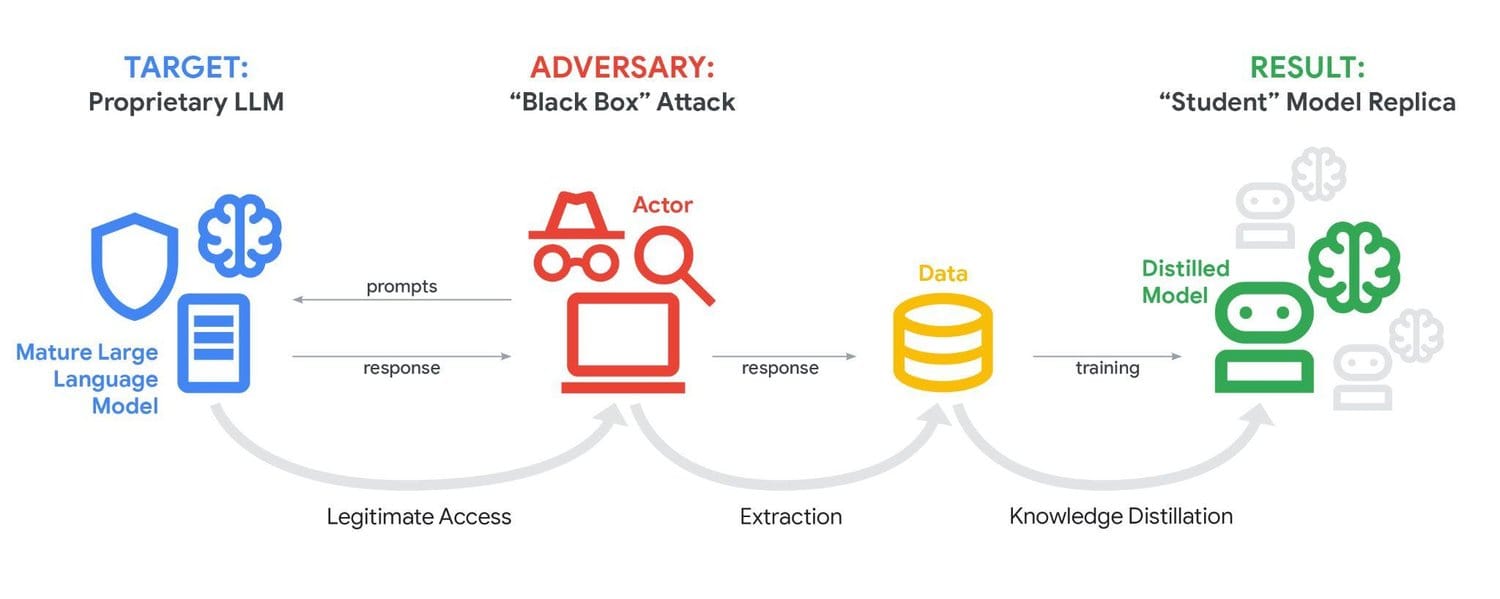

These attacks use legitimate access to systematically probe a mature AI model and harvest enough outputs to recreate its behaviour. In other words, attackers steal the behaviour of one model and use it to enhance the capabilities of another.

This is typically achieved through knowledge distillation, in which a new “student” model is trained on responses from an existing “teacher” model.
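The teacher/student loop can be illustrated with a toy sketch. It assumes a tiny logistic-regression "teacher" hidden behind a hypothetical `teacher_api` function, standing in for a commercial model's API. Real LLM distillation trains a large neural network on generated text rather than on probabilities, but the principle is the same: query the teacher, record its outputs, and fit a student to match them. All names and numbers here are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical "teacher": the attacker can only call teacher_api(), standing in
# for a model served behind an API. Its weights stay hidden from the attacker.
_HIDDEN_W = [2.0, -3.0, 0.5]
_HIDDEN_B = 0.25


def teacher_api(x: list[float]) -> float:
    """Return the teacher's probability that x belongs to class 1."""
    return sigmoid(sum(w * xi for w, xi in zip(_HIDDEN_W, x)) + _HIDDEN_B)


def harvest(n_queries: int, dim: int, seed: int = 0) -> list[tuple[list[float], float]]:
    """Probe the teacher with synthetic inputs and record its soft outputs."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_queries):
        x = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
        data.append((x, teacher_api(x)))
    return data


def train_student(data, dim: int, epochs: int = 300, lr: float = 1.0):
    """Fit a logistic-regression student to the teacher's soft labels.

    Full-batch gradient descent on the cross-entropy between the teacher's and
    the student's probabilities; its gradient w.r.t. the logit is (p_s - p_t).
    """
    w, b = [0.0] * dim, 0.0
    n = len(data)
    for _ in range(epochs):
        gw, gb = [0.0] * dim, 0.0
        for x, p_t in data:
            p_s = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p_s - p_t
            for i in range(dim):
                gw[i] += err * x[i]
            gb += err
        w = [wi - lr * gi / n for wi, gi in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def agreement(w: list[float], b: float, n_tests: int = 1000, seed: int = 1) -> float:
    """Share of fresh inputs on which student and teacher predict the same class."""
    rng = random.Random(seed)
    same = 0
    for _ in range(n_tests):
        x = [rng.uniform(-1.0, 1.0) for _ in range(len(w))]
        student_says = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5
        same += student_says == (teacher_api(x) >= 0.5)
    return same / n_tests


if __name__ == "__main__":
    stolen = harvest(n_queries=400, dim=3)
    w, b = train_student(stolen, dim=3)
    print(f"student/teacher agreement: {agreement(w, b):.1%}")
```

Run as a script, it prints how often the student matches the teacher on inputs it has never seen. In this toy setting that share should be high even though the student never reads `_HIDDEN_W`; it learns only from the teacher's answers, which is what makes API access alone sufficient for the attack.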

In practice, the technique lets attackers accelerate model development at a fraction of the cost, effectively turning someone else’s AI into a shortcut for building a competing product - a form of intellectual property theft.

Distillation itself isn’t inherently malicious - it’s a standard machine learning technique, and Google even sells tools for it. But distilling from Gemini without permission violates Google’s Terms of Service, and the company said it is developing ways to detect and mitigate such attempts.

Google said Gemini is a target because of its "exceptional reasoning capability". Researchers identified more than 100,000 prompts across multiple languages designed to achieve malicious goals.

Attackers were observed attempting to coerce the model into disclosing its full reasoning processes, which are typically hidden from ordinary users.

Google advised organisations that provide AI models to monitor API access carefully for traces of extraction or distillation patterns. It gave the example of a financial services provider's model being targeted by a competitor seeking to rip off research to create a derivative product.
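As a purely illustrative sketch of what such monitoring could look like, a provider might start with per-key heuristics over a sliding time window: unusually high request volume combined with either very diverse, automated-looking prompts or a high share of requests probing for hidden reasoning. The class name, phrase list and thresholds below are hypothetical assumptions, not Google's method, and real detection would need far richer signals.

```python
from collections import defaultdict, deque

# Hypothetical phrases suggesting an attempt to coax out hidden reasoning.
REASONING_PROBES = ("show your reasoning", "full chain of thought", "explain every step")


class ExtractionMonitor:
    """Flag API keys whose recent traffic looks like systematic extraction.

    Illustrative heuristics only: a key is flagged when it sends more than
    `max_requests` requests within `window_s` seconds AND either almost all of
    those prompts are distinct (broad automated probing) or a large share of
    them try to elicit the model's hidden reasoning.
    """

    def __init__(self, window_s: float = 3600.0, max_requests: int = 1000,
                 unique_ratio: float = 0.9, probe_ratio: float = 0.2) -> None:
        self.window_s = window_s
        self.max_requests = max_requests
        self.unique_ratio = unique_ratio
        self.probe_ratio = probe_ratio
        # One deque of (timestamp, prompt) pairs per API key.
        self.clients = defaultdict(deque)

    def record(self, api_key: str, prompt: str, ts: float) -> bool:
        """Log one request and return True if the key now looks suspicious."""
        events = self.clients[api_key]
        events.append((ts, prompt))
        # Slide the window: forget requests older than window_s seconds.
        while ts - events[0][0] > self.window_s:
            events.popleft()
        n = len(events)
        if n <= self.max_requests:
            return False
        prompts = [p for _, p in events]
        unique_share = len(set(prompts)) / n
        probe_share = sum(
            any(phrase in p.lower() for phrase in REASONING_PROBES) for p in prompts
        ) / n
        return unique_share >= self.unique_ratio or probe_share >= self.probe_ratio
```

In use, `record()` would be called from the API gateway for every request. A flag should trigger human review rather than an automatic ban: legitimate heavy users, such as evaluation pipelines, can produce similar traffic.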

How are nation-state threat actors using GenAI?

Alongside model extraction threats, the Google Threat Intelligence Group also found that government-backed actors are using its Gemini AI to accelerate attack lifecycles, speed reconnaissance, conduct social engineering and support malware development.

"A consistent finding over the past year is that government-backed attackers misuse Gemini for coding and scripting tasks, gathering information about potential targets, researching publicly known vulnerabilities, and enabling post-compromise activities," they wrote.

This includes AI-augmented phishing, in which LLMs are used to generate highly personalised lures that are not only more effective but also quicker to produce than traditional phishing content.

AI-generated phishing material is now much less likely to be peppered with telltale spelling mistakes, making it harder to identify and avoid. LLMs can also be used in "rapport-building phishing", which involves multi-turn conversations that begin well before the payload is delivered.

"By lowering the barrier to entry for non-native speakers and automating the creation of high-quality content, adversaries can largely erase those 'tells' and improve the effectiveness of their social engineering efforts," Google wrote.

LLMs also serve as a "strategic force multiplier during the reconnaissance phase of an attack", allowing threat actors to rapidly gather open-source intelligence (OSINT) about targets, identify key decision-makers and map organisational hierarchies.

Researchers also observed threat actors using agentic AI capabilities to support campaigns, for example by prompting Gemini with an expert cybersecurity persona. They predict this threat will grow as more tools and services enabling agentic capabilities become available on underground markets.

Google specifically identified activities from these actors:

⁉️ UNC6418: An unattributed threat actor that used Gemini to gather sensitive emails and credentials, then launched phishing campaigns targeting Ukraine and the defence sector.

🇨🇳 Temp.HEX: A China-linked group that deployed Gemini and other AI tools to profile individuals (including in Pakistan) and map separatist groups, later folding similar targets into its wider operations.

🇮🇷 APT42: Iranian threat actors used Gemini in research campaigns to search for official emails and conduct reconnaissance on the business partners of targets to "establish a credible pretext for an approach". Gemini also enabled fast translation in and out of local languages.

🇰🇵 UNC2970: North Korea's government-backed UNC2970 focused on defence targeting and impersonating corporate recruiters. It used Gemini to synthesise OSINT and profile high-value targets. Its target profiling included searching for information on major cybersecurity and defence companies and mapping specific technical job roles and salary information.

Commenting on the research, Dr Ilia Kolochenko, CEO at ImmuniWeb and fellow of the British Computer Society (BCS), questioned the claim that hackers were increasingly using Gemini and said: "This seems to be a poorly orchestrated PR of Google’s AI technology amid the fading interest and growing disappointment of investors in GenAI.

"Even if APTs utilise GenAI in their cyber-attacks, it does not mean that GenAI has finally become good enough to create sophisticated malware or execute the full cyber kill chain of an attack. GenAI can indeed accelerate and automate some simple processes – even for APT groups – but it has nothing to do with the sensationalised conclusions about the alleged omnipotence of GenAI in hacking."

Javvad Malik, Lead Security Awareness Advocate at KnowBe4, also said: "This is no real surprise. Bad actors are not unlike traditional businesses and will adopt whatever reduces effort, improves scale, or increases return on investment. AI doesn't fundamentally change their motivation - but it does lower the barrier to entry.

"From an organisational perspective, this opens up more challenges. No longer can people spot bad grammar or spelling, or even find some protection in a non-English language. So they need more layered controls which can protect staff and empower them to easily question anything which looks suspicious.

"Assume AI will be used against you, and design controls that don’t rely on humans being perfect every time."

Google's full report contains details of mitigations and indicators of compromise.